Enhancing energy flexibility in clusters of buildings through coordinated energy management

This research activity involves Capozzoli Alfonso, Brandi Silvio, Gallo Antonio, Buscemi Giacomo, Savino Sabrina

(see our Collaborations page for the main collaborations active on these research topics)

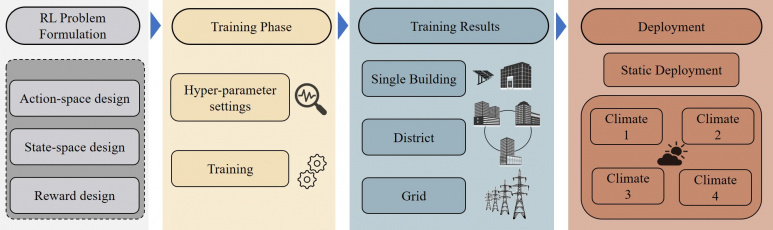

| Objective of the activity: Exploit the potential of Deep Reinforcement Learning for district energy management.

Framework of the activity: District energy management should leverage automated algorithms that can adapt to a changing environment and learn from user behavior and historical building-related data in order to optimize, coordinate, and control the different actors of the smart grid (e.g., producers, service providers, consumers). However, the computational complexity of district simulation and of advanced control strategies limits the application of model-based techniques such as Model Predictive Control (MPC). For this reason, BAEDA Lab is conducting research on data-driven control strategies that reduce the computational burden of the problem. A novel approach exploits the adaptive and potentially model-free nature of Deep Reinforcement Learning (DRL) to coordinate a cluster of buildings.

Figure: Methodological framework for testing Deep Reinforcement Learning control algorithms at district level

Relevant publications on this topic:

|
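As an illustration of what "coordinating" a cluster can mean in a DRL formulation, the following is a minimal sketch of a shared reward computed on the aggregate district load. The function name, the cost-plus-peak-penalty form, and all parameters are assumptions made for this example; they are not the reward used in the lab's work.

```python
from typing import Sequence


def district_reward(
    building_loads_kw: Sequence[float],
    price_eur_per_kwh: float,
    peak_threshold_kw: float,
    peak_weight: float = 1.0,
    step_hours: float = 1.0,
) -> float:
    """Hypothetical shared reward for one control step (higher is better)."""
    # Coordination acts on the aggregate load, not on each building alone.
    district_load_kw = sum(building_loads_kw)
    # Assumption: only energy imported from the grid (positive load) is paid for.
    energy_cost = max(district_load_kw, 0.0) * step_hours * price_eur_per_kwh
    # Penalize only the share of the aggregate load above the threshold.
    peak_excess_kw = max(district_load_kw - peak_threshold_kw, 0.0)
    return -(energy_cost + peak_weight * peak_excess_kw)
```

Because every agent receives the same value, a building is rewarded for shifting its demand away from moments when its neighbors are also consuming, which is one simple way to encourage coordinated behavior.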

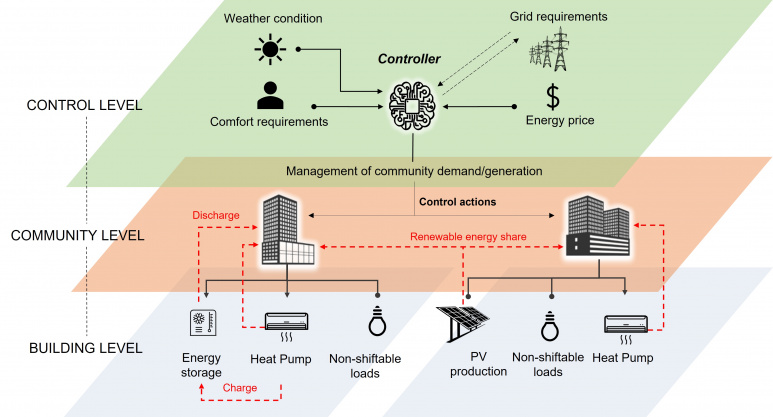

| Objective of the activity: Develop a data-driven framework for advanced control of building energy systems at energy community level.

Framework of the activity: The move away from the traditional way of producing and consuming energy towards more sustainable energy management has shifted the need for flexibility from the generation side to the demand side. In this context, the Energy Community is a new paradigm in which prosumers take a more active role in their interaction with the grid by aggregating their load and generation profiles. Energy Communities can therefore be seen as a means of optimizing energy management in smart grids, with benefits for the members, who can reduce their energy costs, and for the grid, which can use the flexibility provided. Recent studies have shown that coordinated control architectures for energy management in clusters of buildings are effective at achieving this objective. Nonetheless, developing control strategies, and the district-level digital twins needed to test them, is particularly demanding because of the high complexity and computational cost of the control problem. To address these challenges, BAEDA Lab develops generalizable simulation environments for Energy Communities that serve as virtual testbeds for control strategies. The environments are used to evaluate advanced control strategies in terms of achievable energy flexibility and energy cost savings for data-driven energy communities, helping to bridge a gap in current research.

Figure: Framework for advanced control of building energy systems at energy community level

Relevant publications on this topic:

|
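The aggregation of load and generation profiles described above can be sketched in a few lines. This is a minimal example under stated assumptions: the names `CommunitySummary` and `summarize_community` are hypothetical, profiles are given in kW with at least one time step, and exported surplus is assumed to earn nothing.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class CommunitySummary:
    net_load_kw: List[float]   # aggregate load minus aggregate generation
    peak_import_kw: float      # highest power drawn from the grid
    energy_cost: float         # cost of imported energy over the horizon


def summarize_community(
    loads_kw: Sequence[Sequence[float]],
    generation_kw: Sequence[Sequence[float]],
    prices_per_kwh: Sequence[float],
    step_hours: float = 1.0,
) -> CommunitySummary:
    """Aggregate per-member profiles into community-level indicators."""
    n_steps = len(prices_per_kwh)
    net = [
        sum(profile[t] for profile in loads_kw)
        - sum(profile[t] for profile in generation_kw)
        for t in range(n_steps)
    ]
    # Assumption: surplus generation is exported without remuneration.
    imports = [max(value, 0.0) for value in net]
    cost = sum(kw * step_hours * price for kw, price in zip(imports, prices_per_kwh))
    return CommunitySummary(net, max(imports), cost)
```

Comparing these indicators for a baseline controller and for a candidate control strategy gives a first, rough measure of the flexibility and cost savings a strategy achieves.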

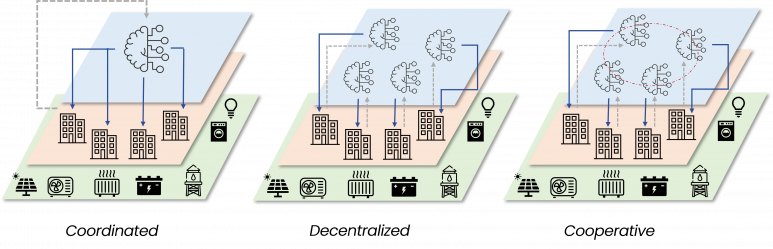

| Objective of the activity: The study aims to identify the advantages and disadvantages of various control architectures in relation to the case study and objective function.

Framework of the activity: District Energy Management (DEM) employs different approaches to optimize energy use across buildings, including:

BAEDA Lab is investigating a range of agent-based architectures (centralized, hierarchical, distributed, and cooperative models with attention mechanisms) to comprehensively assess their strengths and weaknesses for practical implementation.

Figure: Centralized, Decentralized, and Cooperative Architectures of an Energy System Controller in a Cluster of Buildings

Relevant publications on this topic:

|
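The structural difference between a centralized and a decentralized controller can be shown with a small sketch. The function names and the one-action-per-building convention are assumptions made for illustration, and the policies are plain callables standing in for trained agents.

```python
from typing import Callable, List, Sequence

Observation = Sequence[float]


def centralized_step(
    observations: Sequence[Observation],
    policy: Callable[[List[float]], List[float]],
) -> List[float]:
    """One agent sees every building's state and returns one action per building."""
    joint_observation = [value for obs in observations for value in obs]
    actions = policy(joint_observation)
    if len(actions) != len(observations):
        raise ValueError("policy must return exactly one action per building")
    return actions


def decentralized_step(
    observations: Sequence[Observation],
    policies: Sequence[Callable[[Observation], float]],
) -> List[float]:
    """Each building has its own agent, which sees only its local state."""
    return [policy(obs) for obs, policy in zip(observations, policies)]
```

In the centralized case the input grows with the number of buildings, while in the decentralized case each agent's input stays fixed but no agent observes its neighbors. Cooperative schemes sit between the two by sharing selected information among agents.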

|

Objective of the activity:

Framework of the activity:

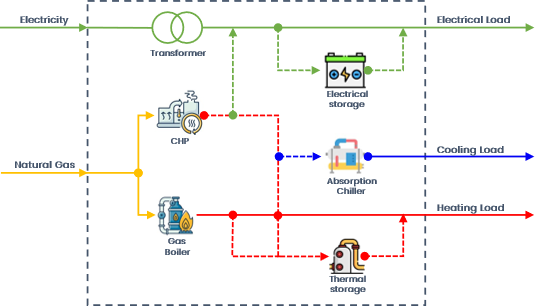

Figure: Layout of an Energy Hub |